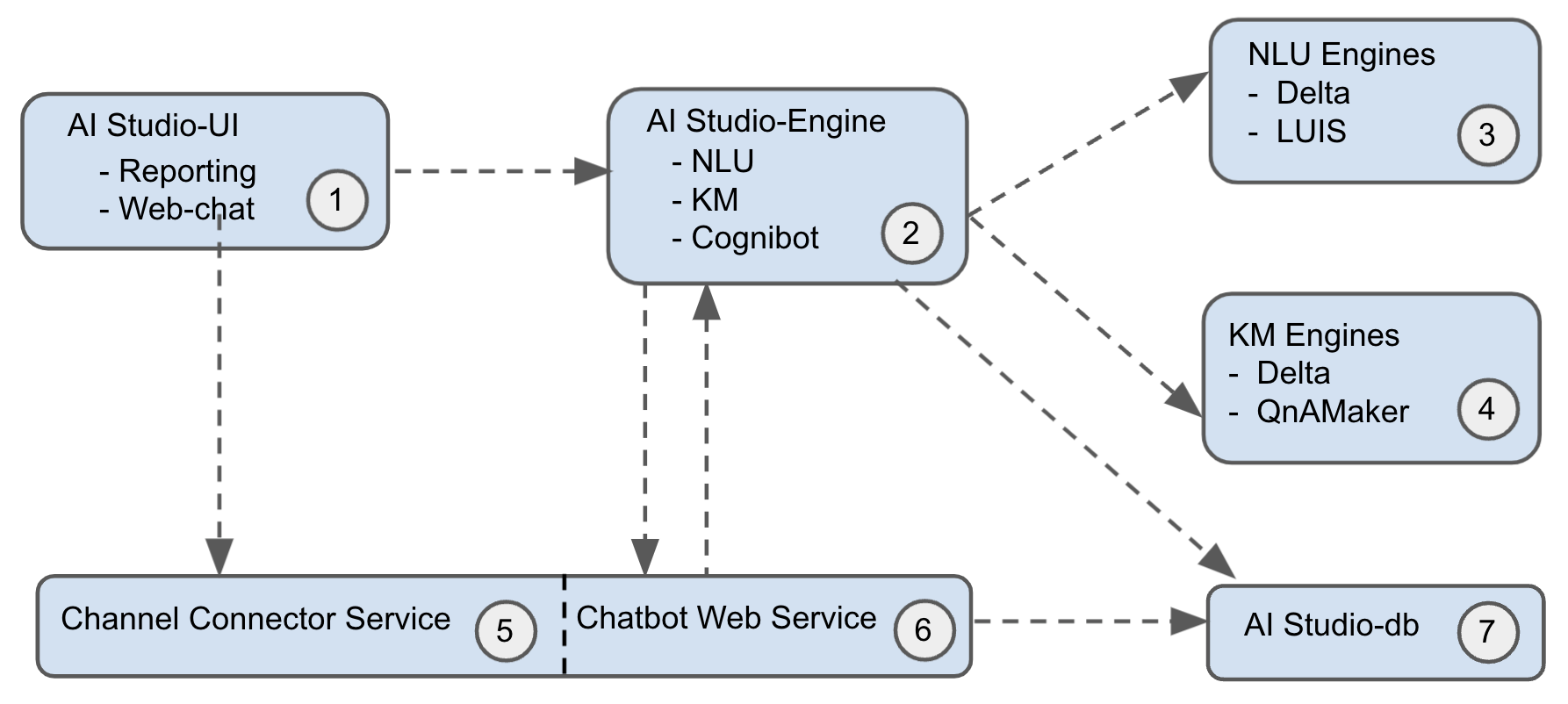

Architecture

AI Studio is a multi-tenant system that supports three types of projects: NLU, KM, and Cognibot. A Cognibot project lets you build a chatbot that uses the natural language understanding of NLU projects and the knowledge article search of KM projects. A Cognibot project can also connect to AutomationEdge as the fulfillment engine for Information Technology Process Automation (ITPA) or Robotic Process Automation (RPA) requests.

- AI Studio-UI: It is the administrative console that lets you create the three types of projects mentioned above. It also has a dialog designer that lets you build and test chatbot dialogs or conversations without writing a single line of code. It communicates with AI Studio-Engine over Representational State Transfer (REST).

- AI Studio-Engine: It is the backend of AI Studio. It uses a relational database as the data store.

- NLU Engine: It gives you the ability to search structured documents. When you create an NLU project in AI Studio, you have to choose the NLU engine. This release supports only Conversational Language Understanding (previously LUIS) as the underlying engine.

- KM Engine: It gives you the ability to search unstructured documents. When you create a KM project in AI Studio, you have to choose the KM engine. This release supports only Custom Question Answering (previously QnA Maker) as the underlying engine.

- Channel Connector Service: It manages communication between the chatbot web service and the different chat channels, such as Webchat, Microsoft Teams, and WhatsApp. Because the chatbot web service no longer has to handle each channel itself, its code becomes largely channel agnostic.

- Chatbot Web Service: It manages chat conversations and conversation state, and keeps a record of each conversation in a relational data store. It also calls AI Studio to reach the underlying NLU and KM projects.

- AI Studio-DB: A PostgreSQL database is generally used by both AI Studio-Engine and the chatbot web service. Typically, you create a separate database for each of them.

On-premise Deployment

The Installation section covers on-premise deployment of all the components shown in the architecture diagram above. Because Conversational Language Understanding (CLU) and Custom Question Answering (Custom QA) serve as the NLU and KM engines respectively, and both are available only in the cloud, you do not install them locally. You can connect to your own CLU app or Custom QA knowledge base by providing the appropriate connection details, or you can connect to our resources to get up and running quickly.

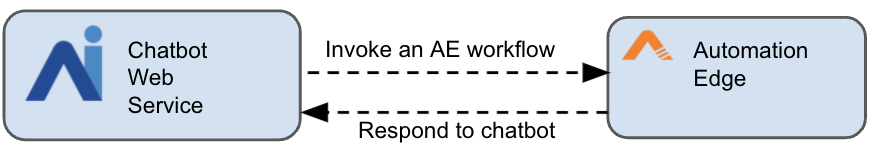

Integration with AutomationEdge

An AI Studio Cognibot project uses AutomationEdge to fulfill requests that originate from users interacting with the chatbot. We host an AutomationEdge instance with some predefined workflows, so you do not need to set up your own AutomationEdge instance for the trial. This instance, https://t4.automationedge.com, runs inside AWS and is managed by us. Workflows that are called from the chatbot typically reply by making a REST call to the chatbot web service as shown below.

Assuming the chatbot web service is running on port 3978 on the local machine, its endpoint http://localhost:3978 is not publicly accessible over the internet. How to resolve this issue, and how to integrate the chatbot web service with MS Teams, is covered in the MS-Teams section. You can use ngrok, which lets you create tunnels to localhost and gives the tunneled service a public endpoint. If you run the ngrok http 3978 command on the local machine, you will see an endpoint similar to https://cdab-116-74-169-47.ngrok.io, which has to be configured in the .env file of the chatbot web service under the key CHATBOT_URL. Note that every time you run the ngrok http 3978 command you get a different public endpoint, so CHATBOT_URL has to be updated each time.
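Because the public endpoint changes on every ngrok run, updating CHATBOT_URL by hand can get tedious. The following is a minimal sketch of one way to automate it, not part of the product. It assumes the ngrok agent is serving its local inspection API at the default address http://127.0.0.1:4040/api/tunnels, and that the .env file uses plain KEY=value lines; the helper names `fetch_tunnels`, `pick_https_url`, and `set_env_key` are hypothetical.

```python
import json
import re
import urllib.request

# Assumption: the ngrok agent's local API is on its default port 4040.
NGROK_API = "http://127.0.0.1:4040/api/tunnels"


def fetch_tunnels(api_url=NGROK_API):
    """Return the tunnel listing reported by the locally running ngrok agent."""
    with urllib.request.urlopen(api_url, timeout=5) as resp:
        return json.load(resp)


def pick_https_url(tunnels_json):
    """Return the first https public URL found in the tunnel listing."""
    for tunnel in tunnels_json.get("tunnels", []):
        url = tunnel.get("public_url", "")
        if url.startswith("https://"):
            return url
    raise ValueError("no https tunnel found; is 'ngrok http 3978' running?")


def set_env_key(env_text, key, value):
    """Return env_text with key set to value, replacing or appending the line."""
    line = f"{key}={value}"
    pattern = re.compile(rf"^{re.escape(key)}=.*$", flags=re.MULTILINE)
    if pattern.search(env_text):
        # A function replacement avoids backslash escapes being interpreted.
        return pattern.sub(lambda _match: line, env_text)
    # Key not present yet: append it, adding a newline separator if needed.
    separator = "" if (not env_text or env_text.endswith("\n")) else "\n"
    return env_text + separator + line + "\n"
```

With ngrok running, you would call `pick_https_url(fetch_tunnels())`, pass the result to `set_env_key` together with the current contents of the .env file and the key CHATBOT_URL, write the returned text back to the file, and then restart the chatbot web service so that it picks up the new value.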

If you run the chatbot web service on a machine that has a public IP address and allows incoming traffic on the port the service listens on, you do not need ngrok for AutomationEdge workflows to communicate back to the chatbot web service. To integrate with MS Teams, however, you need not only a public endpoint but also a valid HTTPS certificate. ngrok gives you that as well.
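The two requirements above (a public endpoint and HTTPS) can be checked roughly before attempting the MS Teams integration. The sketch below is illustrative only: the function name `teams_endpoint_problems` is hypothetical, it inspects just the URL string, and it does not verify that the certificate is actually valid or that a DNS name resolves to a reachable host.

```python
import ipaddress
from urllib.parse import urlparse


def teams_endpoint_problems(url):
    """Return a list of obvious problems with a candidate CHATBOT_URL."""
    problems = []
    parsed = urlparse(url)
    host = parsed.hostname or ""

    if parsed.scheme != "https":
        problems.append("endpoint must use https")

    if host in ("", "localhost"):
        problems.append("host is not publicly reachable")
    else:
        try:
            ip = ipaddress.ip_address(host)
        except ValueError:
            ip = None  # a DNS name; reachability cannot be judged offline
        # Loopback and private-range addresses are not routable from the internet.
        if ip is not None and not ip.is_global:
            problems.append("host is not publicly reachable")

    return problems
```

For example, `teams_endpoint_problems("http://localhost:3978")` reports both problems, while an ngrok endpoint such as https://cdab-116-74-169-47.ngrok.io returns an empty list.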